Source: India Today

NEW DELHI – In a major overhaul of digital safety regulations, the Indian government has notified significant amendments to the Information Technology Rules, 2021, mandating that social media platforms remove flagged deepfakes and harmful AI-generated content within a strict three-hour window.

The new regulations, issued by the Ministry of Electronics and Information Technology (MeitY) on February 10, 2026, represent a drastic shift from the previous 36-hour grace period, signaling a “zero-tolerance” approach toward synthetic misinformation.

Key Mandates of the New Policy

The updated framework introduces several rigorous requirements for “Significant Social Media Intermediaries” (platforms with over 5 million users):

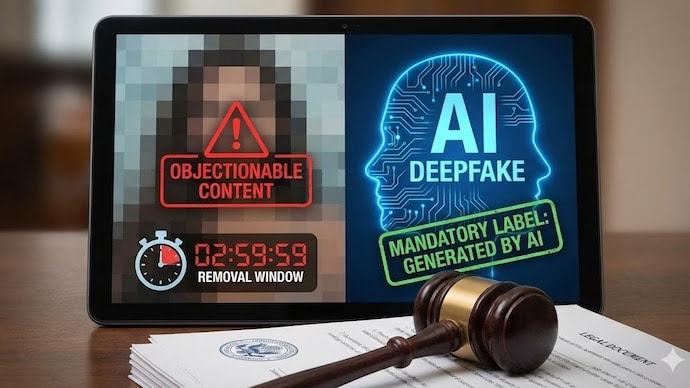

Accelerated Takedowns: Platforms must remove AI-generated content within 3 hours if flagged by a court or competent government authority. For non-consensual intimate imagery (NCII), the deadline is even tighter at 2 hours.

Mandatory AI Labeling: Any “Synthetically Generated Information” (SGI)—including audio, video, or images that appear authentic—must be prominently labeled.

Permanent Digital Fingerprints: Platforms are barred from allowing the removal or suppression of AI labels. They must also embed permanent metadata or unique identifiers to ensure the content remains traceable.

Proactive Detection: Companies are now required to deploy automated tools and “reasonable technical measures” to prevent the circulation of illegal, sexually exploitative, or deceptive AI content.

Defining “Synthetic Information”

To avoid legal ambiguity, the government has formally defined Synthetically Generated Information as any content created or altered using computer resources in a manner that makes it appear “real, authentic, or true.”

Exclusions: The rules provide a “good-faith” buffer. Routine editing, color correction, accessibility enhancements (such as translations), and educational or research-based design work are excluded from these strict labeling requirements, provided they do not materially misrepresent the facts.

Enforcement and Compliance

The rules are set to come into force on February 20, 2026. In addition to these technical measures, platforms must now require users to declare whether the content they are uploading is AI-generated.

Failure to comply with these “due diligence” requirements could result in platforms losing their “Safe Harbour” protection—the legal immunity that prevents them from being held liable for content posted by their users.

Why the Change?

The move comes as India prepares to host a global AI summit and follows a surge in viral deepfakes targeting public figures and private citizens alike. By treating synthetic media on par with other “unlawful information,” the government aims to curb the spread of misinformation before it can cause real-world harm or civil unrest.